Overview

In this building block, you will delve deep into the inner workings of XGBoost, a top-tier machine learning algorithm known for its efficiency and effectiveness.

Navigating through this guide, you will gain hands-on experience and broaden your understanding of:

- The ‘under the hood’ process of how XGboost works.

- How to use XGBoost in

R, what the hyperparameters are, and how to tune them using a grid search. - How to interpret the results.

- How to visualize the algorithm.

Step into the world of XGBoost, where you’ll learn to build, fine-tune, and interpret highly reliable models, ready to make impactful predictions across various industries.

What is XGBoost?

Extreme gradient boosting is an highly effective and widely used machine learning algorithm developed by Chen and Guestrin 2016. The algorithm works by iteratively building a collection of decision trees, where newer trees are used to correct the errors made by previous trees (think of it as taking small steps in order to come closer to the “ultimate truth”). Additionally, the algorithm use L1 (Lasso regression) and L2 (Ridge regression) regularization terms in its cost function, and a penalizing term to prevent overfitting and complexity of the model.

This method has proven to be effective in working with large and complex datasets. Also it is famous to handle sparse datasets. XGBoost provides a parallel tree boosting that solve many data science problems such as classification, regression, and recommendation in a fast and accurate way (for example, at the KDDCup in 2015, XGBoost was used by every team ranked in the top 10!).

How does XGBoost work?

The algorithm starts by calculating the residual values for each data point based on an initial estimate. For instance, given the variables age and degree, we compute the residual values relative to salary(target variable), for which the value 49 will serve as our initial estimation:

| age | degree | Salary | Residual |

|---|---|---|---|

| 20 | yes | 40 | -9 |

| 28 | yes | 46 | -3 |

| 31 | no | 50 | 1 |

| 34 | yes | 54 | 5 |

| 38 | no | 61 | 12 |

Next, the algorithm calculates the similarity score for the entire tree and the individual splits, especially focusing on an arbitrarily chosen mean value of 24 (the mean between the first two age values) using the following formula:

Warning

Warningλ is a regularization parameter which is used to prune the tree. For now, we set it to the default value of 1

Following the calculation of the individual similarity scores, the gain is calculated as:

The algorithm then compares this value to gain-values generated by other split options within the dataset to determine which division optimally enhances the separation of classes. For example, when we choose the split value of 29.5 (the mean value between the second and third ages), the similarity score of the root node remains unchanged, while the similarity scores of the left and right nodes change to:

The gain-value therefore becomes:

Which is a higher value than that of the split based on our initial values, and is therefore used as the more favorable option. The same process is then repeated for all possible split combinations in order to find the best option. After this process is completed for all possible split options the final tree (with the residual values) becomes:

The ouput value for each data point is then calculated via the following formula:

Warning

Warningɑ is the learning rate of the model, for now we set it to the default value of 0.3

From this we can see that our output value for the average salary of a 20 year old has decreased from the initial prediction of 49 to our new prediction of 47.2, which is closer to the true value of 40! An overview of all output values can be found below, from these output values the new residual values Res2 are constructed. Therefore we can conclude that the average residual has decreased!

This process is then repeated until the model cannot improve anymore

| Salary | age | degree | Residual | Output | Residual 2 |

|---|---|---|---|---|---|

| 40 | 20 | yes | -9 | 47.2 | -7.2 |

| 46 | 28 | yes | -3 | 47.2 | -7.2 |

| 50 | 31 | no | 1 | 50.95 | -0.95 |

| 54 | 34 | yes | 5 | 50.5 | 3.5 |

| 61 | 38 | no | 12 | 50.95 | 10.05 |

An additional factor we need to take into account is gamma (γ), which is an arbitrary regularization parameter that controls the complexity of individual trees in the boosting process. It is a measure of how much an additional split will need to reduce loss in order to be added to the collection. A higher gamma value encourages simpler trees with fewer splits, which helps to prevent overfitting and lead to a more generalized model. A lower gamma, on the other hand, allows for more splits and potentially more complex trees which may lead to better fit on the training data but could increase the risk of overfitting.

The tree is pruned when:

In this case the branch is removed. In other words the gain-value always needs to be higher than gamma in order for a split to be used.

Using XGBoost in R

You can get started by using the xgboost package in R.

In what follows, we use this dataset from Kaggle for illustration. With this dataset we will try to predict the house price based on a number of features (e.g., number of bedrooms, stories, type of building etc).

In order to work with the algorithm you first need to install the XGBoost package, load the data and remove some columns via the following commands:

#install the xgboost package

install.packages("xgboost")

# Load necessary packages

library(xgboost)

library(tidyverse)

library(caret)

# Open the dataset

data <- read.csv("../all_v2.csv", nrows = 2000)

#remove columns

data <- data %>%

select(-date, -time, -geo_lat, -geo_lon, -region)

Warning

WarningBecause XGBoost only works with numeric vectors, so you need to convert the categorical values into numerical ones using one-hot encoding or any other approaches.

Luckily, this is straightforward to do using the dplyr package. One-hot encoding is a process that transforms categorical data into a format that machine learning algorithms can understand. In the code below each category value in a column is transformed into its own column where the presence of the category is marked with a 1, and its absence is marked with a 0.

data <- data %>%

mutate(building_type_1 = as.integer(building_type == 1),

building_type_2 = as.integer(building_type == 2),

building_type_3 = as.integer(building_type == 3),

building_type_4 = as.integer(building_type == 4),

building_type_5 = as.integer(building_type == 5)) %>%

select(-building_type)

Now that we have one-hot encoded our data we separate the target variable price from the input variables. Subsequently, the data is divided into training and testing sets using an 80/20 split ratio. Finally the data is converted into separate data matrices in order for XGBoost to use it.

#separate target variable (price) from input variables

X <- data %>% select(-price)

Y <- data$price

set.seed(123) #set seed for reproducibility

train_indices <- sample(nrow(data), 0.8 * nrow(data)) #sample 80% for training set

X_train <- X[train_indices, ] #extract features for training set

y_train <- Y[train_indices] #extract target values for training set

X_test <- X[-train_indices, ]

y_test <- Y[-train_indices]

#convert both sets to a DMatrix format, in order for xgboost to work with the data

dtrain <- xgb.DMatrix(data = as.matrix(X_train), label = y_train)

dtest <- xgb.DMatrix(data = as.matrix(X_test), label = y_test)

Hyperparameters

Now that we have created our training data and test data we need to set up our hyperparameters (think of these as our knobs and handles for the algorithm). These hyperparameters for the XGBoost algorithm consist out of:

max_depth: Specifies the maximum depth of a tree.min_child_weight: Minimum sum of instance weight needed in a child to further split it.gamma: Regularization term. Specifies a penalty for each split in a tree.eta: Learning rate, which controls the weights on different features to make the boosting process more conservative (or aggressive).colsample_bytree: Fraction of features sampled for building each tree (to introduce randomness and variety).objective: Specifies the object (regression, or classification).eval_metric: Specifies the model performance (e.g. RMSE).nrounds: Number of boosting rounds or trees to be built.

Let us now (arbitrarily) set up some hyperparameters:

params <- list(

max_depth = 4,

min_child_weight = 1,

gamma = 1,

eta = 0.1,

colsample_bytree = 0.8,

objective = "reg:squarederror", # Set the objective for regression

eval_metric = "rmse", # Use RMSE for evaluation

nrounds = 100

)

Tip

TipYou can also use objective = binary:logistic for classification.

With the parameters specified we can now pass them to the xgb.train command in order to train the model on the dtrain data!

xgb_model <- xgb.train(

params = params,

data = dtrain,

nrounds = params$nrounds,

early_stopping_rounds = 20, # stop iteration when there test set does not improve for 20 rounds

watchlist = list(train = dtrain, test = dtest),

verbose = 1 # print progress to screen

)

Finally, let us make some predictions on the new dataset, view the original prices and the predicted prices next to each other, and assess the Mean Absolute Error (MAE).

predictions <- predict(xgb_model, newdata = dtest)

results <- data.frame(

Actual_Price = y_test,

Predicted_Price = predictions

)

mae <- mean(abs(predictions - y_test))

mae

As can be seen the predictions of the algorithm and the original prices still differ significantly (MAE is almost 2 million). A possible reason for this could be that the hyperparameters were arbitrarily chosen. In order to perform a more structured way to choose our hyperparameter we can perform a grid search.

Hyperparameter optimization using grid search

Selecting the correct hyperparameters can prove to be quite a time-consuming task. Fortunately, there exists a technique in the form of grid search that can help alleviate this burden.

Utilizing a grid search involves selecting a predefined range of potential hyperparameters for examination. The grid search systematically tests each specified combination, identifying the set of parameters that yield the highest level of optimization. Additionally, we make use of a 5-fold cross validation in order to robustly assess the models performance across different subsets of the data.

ctrl <- trainControl(

method = "cv", # Use cross-validation

number = 5, # 5-fold cross-validation

verboseIter = TRUE, # print progress messages

allowParallel = TRUE # allow for prarallel processing (to speed up computations)

)

Following which we define the hyperparameter grid:

hyper_grid <- expand.grid(

nrounds = c(100, 200),

max_depth = c(3, 6, 8),

eta = c(0.001, 0.01, 0.1),

gamma = c(0, 0.1, 0.5),

colsample_bytree = c(0.6, 0.8, 1),

min_child_weight = c(1, 3, 5),

subsample = c(1)

)

In order to run the grid search we run the following code. With X and Y representing the input features and the target variable, respectively (this could take some time).

# Perform grid search

xgb_grid <- train(

X, Y,

method = "xgbTree", # specify that xgboost is used

trControl = ctrl, # specify the 5-fold cross validation

tuneGrid = hyper_grid,

metric = "RMSE" # specify the evaluation method

)

# Print the best model

print(xgb_grid$bestTune)

from which the output is:

| nrounds | max_depth | eta | gamma | colsample_bytree | min_child_weight | subsample | |

|---|---|---|---|---|---|---|---|

| 445 | 100 | 8 | 0.1 | 0 | 1 | 1 | 1 |

indicating the following:

nrounds= 100 means that the training process will involve 100 boosting rounds or iterations.- The value of 3 for

max_depthmeans that the maximum depth of each decision tree in the ensemble was limited to 8 levels. eta= 0.1 indicates that a relatively small learning rate was applied making the model learn slow (but steady!)- the value of 0 for

gammameans that there was no minimum loss reduction required to make a further split. In other words, the algorithm could split nodes even if the split didn’t lead to a reduction in the loss function. colsample_bytree= 1 indicated that all available features are considered during training- a new split in a tree node was required to have a minimum sum of instance weights of 1, as indicated by

min_child_weight - the full

subsamplewas used

Assessment

for the full set of combinations the command below can be used. This will also return all other indicators like RMSE, R2, MAE, R2SD, MAESD.

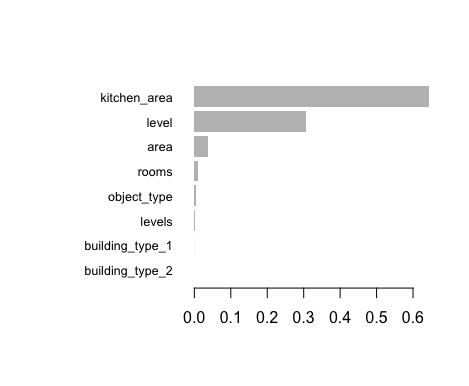

Additionally, the importance of individual features can also be assessed. This can be done via the following code:

importance_matrix <- xgb.importance(feature_names = colnames(X), model = xgb_model)

xgb.plot.importance(importance_matrix)

Which results in the following graph, from which it can be see that kitchen_area is the most important feature in predicting house prices.

Visualization

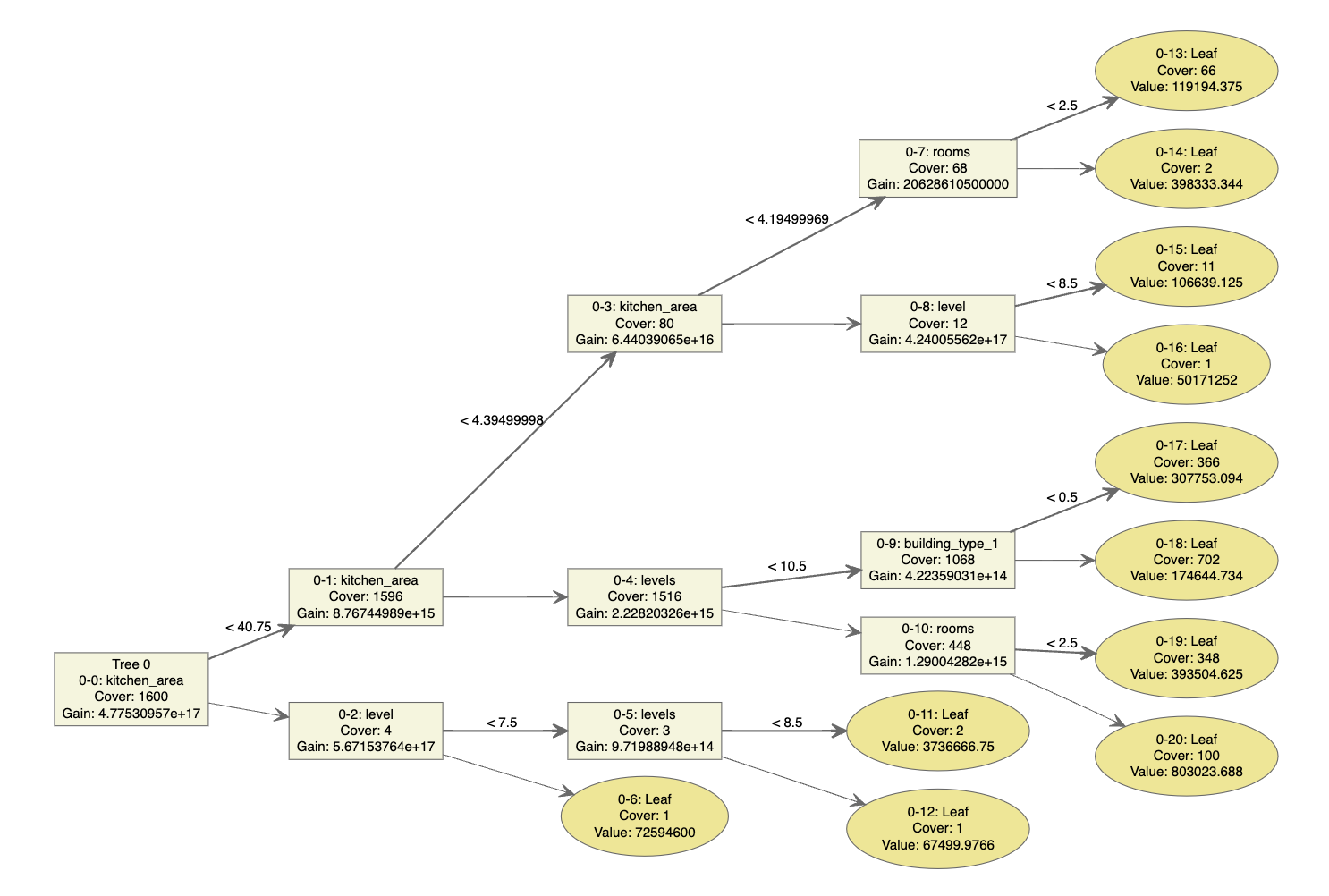

In order to visualize the ensemble of created trees (using the hyperparameters found in the grid search), let’s run the next line of code:

xgb.plot.multi.trees(feature_names = names(data),

model = xgb_model) #the size of this plot can be adjusted by limiting the max_depth variable

This code will return the entire ensemble of trees in the model, and is intended to improve the interpretability of the model. The numbers between the brackets are the splits where the tree changes its value (so the first tree has its split for the feature hotwaterheating at 3.76e+14).

To better understand the model’s inner workings, the xgb.plot.tree function can be useful. It allows plotting individual trees rather than the entire ensemble, and allows you to look trough the different trees generated by the algorithm.

xgb.plot.tree(model = xgb_model,

trees = 0, #you can iterate trough the different trees by adjusting this value

show_node_id = TRUE)

From the graph that follows we can see that kitchen_area is used to make the first split in the first tree. The values 0 or 0-2 are the node_id of the leaves themselves. Furthermore the ovals indicate terminal nodes, while the square boxes are internal nodes.

Additionally:

- Cover indicates the number of instances in a given branch, when this is low it indicates that decisions are made on few data points (which could lead to overfitting).

- Gain is a measure of the improvement in accuracy, a higher gain value thus indicates a more important feature.

- Value is the predicted score or output value that is used to make the final prediction.

Tip

TipThis article is only a small introduction on XGBoost, and there are many more resources available on the internet such as:

Summary

Summary-

Understanding XGBoost:

- Dive into the mechanics of XGBoost’s functionality.

- Explore its application within the

R.

-

Setting Up and Tuning the Algorithm:

- Define and understand the hyperparameters.

- Employ a systematic grid search for optimal model performance.

-

Interpreting and Visualizing Outcomes:

- Analyze model results for meaningful insights.

- Visualize your algorithm’s efficacy and decision process.